I woke up last Saturday and saw the tweet below from Stella Cannefax announcing the 1.0 release of her OSC library, OscCore (compatible with Windows, macOS, Android, and iOS). I immediately needed to try to build something! To my delight, it took very little of my Saturday morning to integrate OSC components into the AR music interfaces of Broken Place AR.

OSC and why it matters

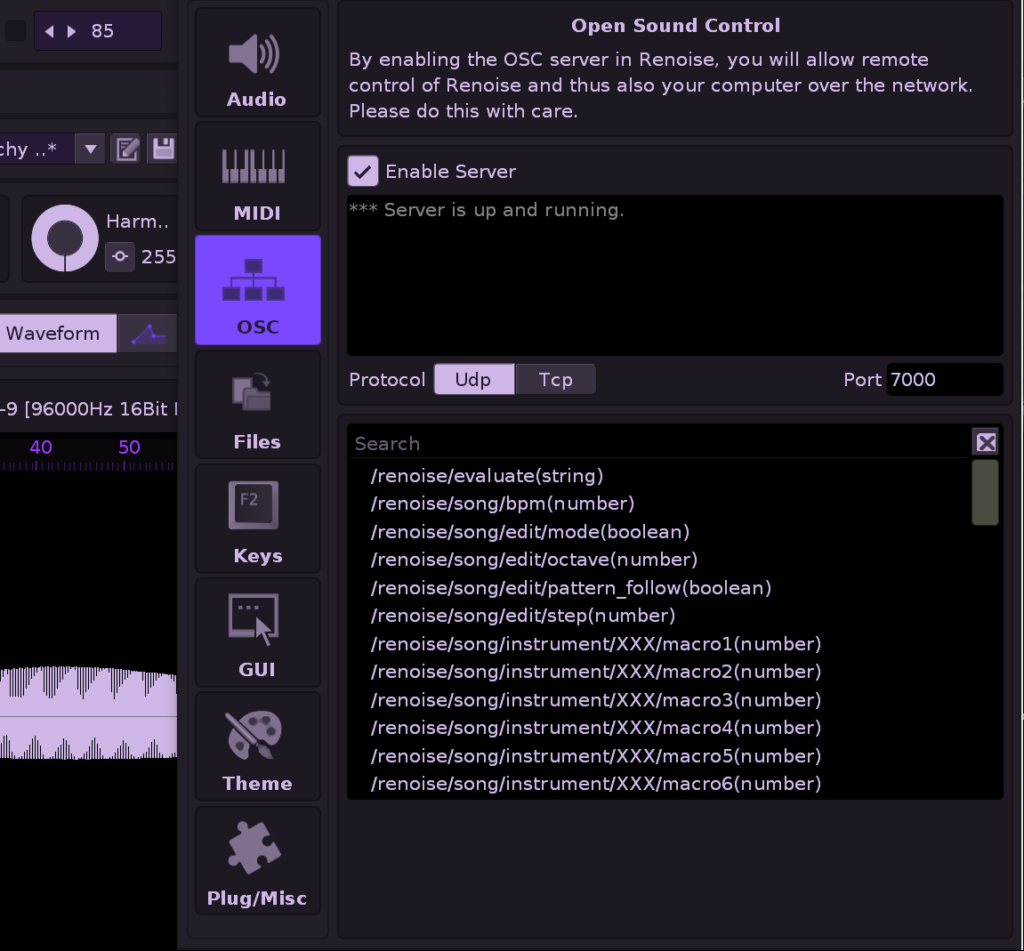

Open Sound Control (OSC) is a popular messaging protocol for broadcasting MIDI-like control information at higher resolution than MIDI, for example as 32-bit floats rather than 7-bit controller values. It is typically used in music production and performance, visual experiences, and much more. For this morning project, I focused on making an AR controller for the Renoise music software. Renoise natively supports Open Sound Control and exposes many of its project control parameters through various routes (OSC addresses). Below is a screenshot of the messages Renoise accepts. You may notice that the available messages are very simple routes: for instance, /renoise/song/bpm sets the song's BPM to the value sent via the protocol, in this case a number.

In the /renoise/song/bpm example above, the route expects an argument of a specific type; in Renoise that is most often a boolean or a number. Lucky for us, those types of values are very easy to find in Unity components and to translate using the handy OscCore components. 😈
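To make "a route plus a typed value" concrete, here is a minimal Python sketch of how an OSC message is laid out on the wire, following the OSC 1.0 encoding plus OSC 1.1's `T`/`F` tags for booleans. It is not OscCore's code, just an illustration: the address and a type-tag string are each null-terminated and padded to 4 bytes, followed by the big-endian arguments. Sending 120.0 as a float32 to /renoise/song/bpm is an assumption about what Renoise accepts for its number argument.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message, also supporting the OSC 1.1 T/F boolean tags."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):  # check bool before int: bool subclasses int
            type_tags += "T" if arg else "F"  # booleans carry no payload bytes
        elif isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _pad(arg.encode("utf-8"))  # OSC-string argument
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload
```

For `encode_osc_message("/renoise/song/bpm", 120.0)` this produces 28 bytes: 20 for the padded address, 4 for the `,f` type tags, and 4 for the float.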

Getting started with OscCore in Unity

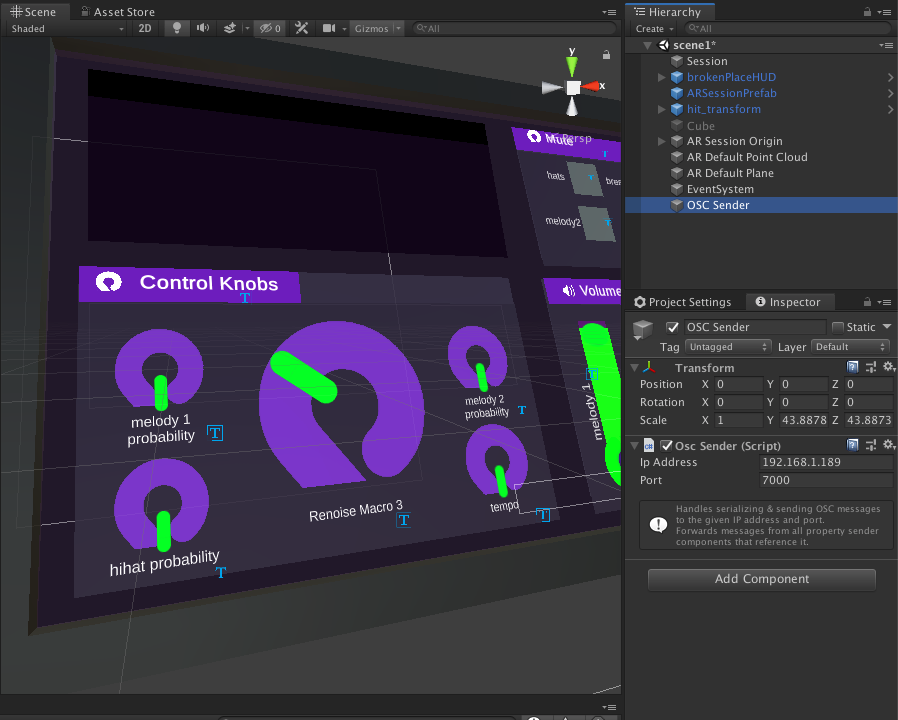

OscCore highlights that it is tiny, and I think the greatest testament to that is how quickly I was able to configure it. The system requires two things: a network object that handles sending messages, and an object that defines the messages, or routes, to send values to. The latter is what maps your game object values to a desired OSC message. Below is a screenshot of the primary “network” component assigned to its own root-level game object in a standard AR Foundation scene. (Note: I’m using the iOS build target.)

The OSC Sender component lets you set the IP address and port that messages will be broadcast to. I plan to expose this option via player prefs in a later version of this project so that users can define the IP address of their target computer. It’s important to note that if you use this in a public setting, you should create your own private network to connect your app and host computer. Once both devices are on the same secure network, you can find your target computer’s IP address in its system network settings and configure the component accordingly. This is where a player pref option for setting the IP will come in handy. ;D
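The OSC Sender's job boils down to delivering those bytes to one IP address and port. OSC itself doesn't mandate a transport, but UDP is the common choice, so the sketch below (plain Python sockets, not OscCore) sends a float message as a UDP datagram and validates a user-entered IPv4 address the way a future player-pref field might. `send_osc` and `float_message` are hypothetical helper names for illustration.

```python
import ipaddress
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def float_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying a single big-endian float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def send_osc(host: str, port: int, packet: bytes) -> None:
    """Send one OSC packet as a UDP datagram after validating the target."""
    ipaddress.IPv4Address(host)  # raises a ValueError subclass for a malformed IP
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

A call might look like `send_osc("192.168.1.20", 8000, float_message("/renoise/song/bpm", 128.0))`, where the IP and port are placeholders: use your host computer's actual IP and the port configured in Renoise's OSC preferences.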

Sending messages with OscCore

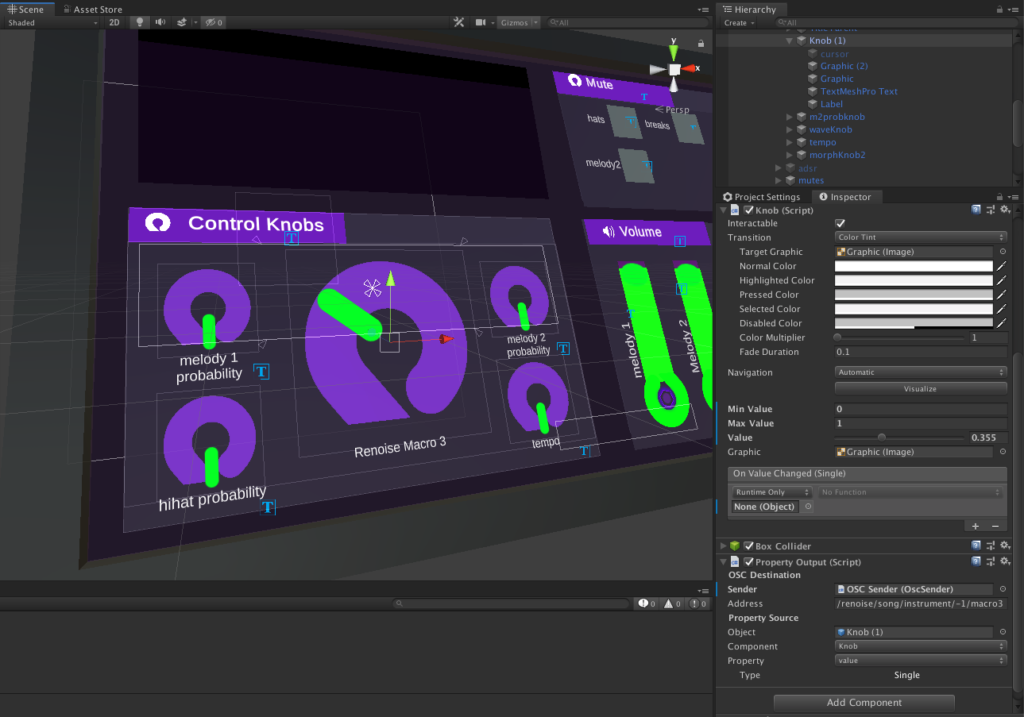

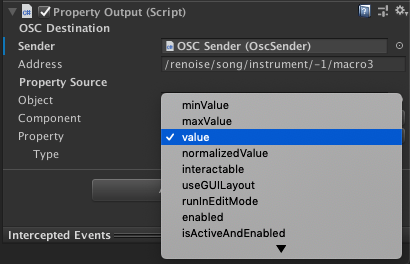

Messages can be sent to a defined route using the Property Output component. This component maps a value on another component, in my case a VJUI knob, to a given route. I recommend placing these components on the same object they reference. In my experiments, I had a top-level game object referencing multiple knobs, and because of how my AR Foundation scene instantiated the prefab, it lost those references. So yeah, attach the component to the thing it is watching if it makes sense.

Below is a close-up screenshot of the Property Output component. You’ll notice that the component references the OSC Sender component, which sends the defined messages. For my knob, the Property Output component binds the /renoise/song/instrument/-1/macro3 route to the knob’s value parameter, so changing that value immediately sends a message to the target app. Below are also some video tweets that show off my working proof of concept. Yay!
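The Property Output pattern can be sketched as a binding between one value and one route. The `PropertyBinding` class below is hypothetical, not OscCore's API: it is handed a send function, clamps the knob value to 0..1 (an assumption about what Renoise's macro routes expect), and only emits a message when the value actually changes.

```python
import struct
from typing import Callable, Optional


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def float_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying a single big-endian float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


class PropertyBinding:
    """Hypothetical stand-in for a Property Output: one value bound to one OSC route."""

    def __init__(self, address: str, send: Callable[[bytes], None]) -> None:
        self.address = address
        self.send = send  # e.g. a function wrapping a UDP socket
        self._last: Optional[float] = None

    def update(self, value: float) -> bool:
        """Send only if the clamped value changed; return whether a message went out."""
        value = min(max(value, 0.0), 1.0)  # assumption: the route wants 0..1
        if value == self._last:
            return False
        self._last = value
        self.send(float_message(self.address, value))
        return True
```

Sending only on change keeps the network quiet while a knob is idle, and injecting `send` makes it easy to swap the UDP socket for a plain list when experimenting, e.g. `PropertyBinding("/renoise/song/instrument/-1/macro3", sent.append)`.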

Where to take this project?

Well shoot, I have a fun new project to chip away at! Something I want to try next is targeting two separate devices from a single AR app. Imagine one computer hooked to a projector, glitching a feed of the AR app’s perspective, and another computer on stage performing music. With a single controller, the performer can be in both places at once, and could even tie the sound controls to the visuals by attaching multiple Property Output components to a single UI component. I have so many ideas for things to build with OscCore. You should check it out and contribute back if you find improvements! I highly recommend giving the project’s README a glance before getting started. It gets deep into the code and makes my mind race with ideas. 😀 Thanks Stella!
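Driving two machines from one control, as imagined above, amounts to fanning one value out to several (host, port, route) targets, much like stacking several Property Output components on one UI element. Here is a minimal Python sketch of that idea; `Target`, `fan_out`, and the `/visuals/glitch/amount` route on the projector machine are all hypothetical names for illustration.

```python
import socket
import struct
from typing import List, NamedTuple


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def float_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying a single big-endian float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


class Target(NamedTuple):
    host: str
    port: int
    address: str  # OSC route on that machine


def fan_out(sock: socket.socket, targets: List[Target], value: float) -> int:
    """Send one control value to every target over UDP; return the packet count."""
    for t in targets:
        sock.sendto(float_message(t.address, value), (t.host, t.port))
    return len(targets)
```

For example, `[Target("192.168.1.20", 8000, "/renoise/song/instrument/-1/macro3"), Target("192.168.1.30", 9000, "/visuals/glitch/amount")]` would send the same knob value to the stage computer and the projector computer (all IPs, ports, and the visuals route are placeholders).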

Anthony Burchell

Founder, Broken Place